Earlier this year, the European Union took a pioneering step with the adoption of the EU AI Act, the world’s first comprehensive AI regulation. The Act is part of a wider package of policies to support the development of trustworthy AI, which also includes the AI Innovation Package and the Coordinated Plan on AI.

The European Parliament approved the AI Act on March 13, 2024, with 523 votes in favor, 46 against, and 49 abstentions (see the complete AI Act here as pdf). The purpose of this major piece of legislation is to ensure that organizations using AI to make decisions do not discriminate against people and that their systems are not biased against certain groups based on race, gender, religion, or any other attribute.

The Act will enter into force 20 days after its publication in the EU Official Journal, which follows its formal adoption by the Council of the European Union, expected soon. Entry into force may thus fall at the end of May or in early June 2024.

The core of the legislation is a categorization of AI systems based on risk, with corresponding obligations for AI providers to protect user data and privacy. Through these measures, the European Union seeks to balance AI innovation with protecting public interests, particularly transparency, data protection, and compliance. It is widely expected that the EU AI Act will greatly influence AI regulation in other jurisdictions and, as a result, legal practices and tech developments worldwide.

OVERVIEW OF THE EU AI ACT

Definition of AI

First, the EU AI Act defines an “AI system” under Article 3 as follows:

A machine-based system designed to operate with varying levels of autonomy that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers from the input it receives, how to generate outputs such as predictions, content, recommendations or decisions that can influence physical or virtual environments.

This definition is likely to promote the EU AI Act’s acceptance and harmonization, as it corresponds to the definition used by the Organisation for Economic Co-operation and Development (OECD).

In other words, any machine learning model, system, or application that produces output that may influence conditions or decisions in the real world, particularly users’ physical or digital conditions, falls under the Act. Examples of such influence include the following:

- deciding on or managing risk levels, such as credit risks, legal risks, health risks;

- determining the content users are presented with, e.g. in a news feed, as ads, or in the form of AI-generated texts;

- recommending products, services, medical treatments, legal strategies, etc.;

- determining prices for products shown to people based on personal data.

That is, any ML model, system, or application that influences individuals in the EU in this way will be required to adhere to the AI Act.

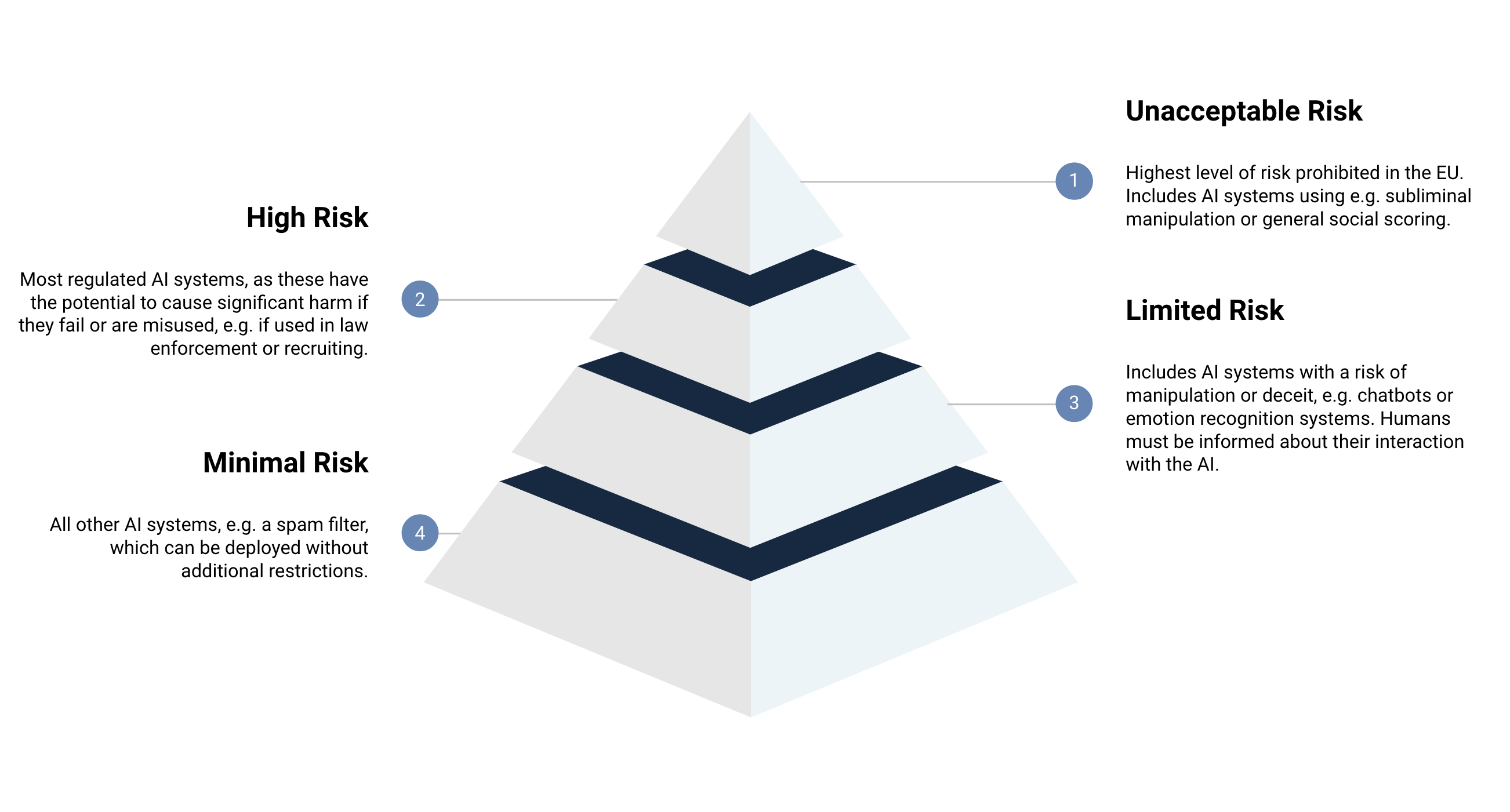

Risk Classification and Regulation

Providers and users are subject to distinct regulatory obligations based on the perceived risk level of the AI technology they engage with, ensuring accountability and compliance across the board. To mitigate the risks of AI systems, the EU AI Act introduces a risk classification scheme to evaluate the potential impact of an AI system on individual users and society at large. This classification encompasses the following primary categories:

- Unacceptable risk AI systems (see Art. 5 AI Act);

- High-risk AI systems (see Art. 6 AI Act);

- Limited risk AI systems;

- Minimal risk AI systems.

Before looking into these categories, it is worth noting that studies have found that 18% of AI/ML systems fall into the high-risk category and 42% into the low-risk category, while for the remaining 40% it is unclear whether they fall into the high-risk category or not.

Unacceptable Risk AI Systems

AI systems deemed to pose an unacceptable risk are those that threaten the well-being and rights of individuals. These include the following practices:

- manipulation of behavior;

- manipulation and exploitation of vulnerable groups;

- biometric categorization;

- social scoring;

- real-time remote biometric identification;

- predictive policing;

- untargeted scraping of biometric data;

- emotion recognition.

With a few exceptions granted for law enforcement purposes under controlled circumstances, the use of such systems is prohibited.

High-Risk AI Systems

Under Art. 6 (1) AI Act, high-risk AI systems are identified as those that pose significant threats to the health and safety of individuals or have a substantial impact on fundamental rights. This particularly covers AI technology deployed in products falling under existing EU product safety regulations such as civil aviation, vehicle security, marine equipment, toys, lifts, etc.

Additionally, Annex III AI Act lists explicit instances of high-risk AI systems, including the following:

- Biometrics;

- Critical infrastructure;

- Education and vocational training;

- Employment, workers’ management and access to self-employment;

- Access to and enjoyment of essential private services and essential public services and benefits;

- Law enforcement;

- Migration, asylum and border control management;

- Administration of justice and democratic processes.

Limited and Minimal Risk AI Systems

Limited Risk: This category includes AI systems with a risk of manipulation or deceit. AI systems falling under this category must be transparent, meaning humans must be informed that they are interacting with an AI (unless this is obvious), and any deep fakes must be labeled as such. For example, chatbots are classified as limited risk. These transparency duties are especially relevant for generative AI systems and their content.

Minimal Risk: The lowest category includes all other AI systems that do not fall under the above-mentioned categories, such as spam filters. AI systems in this category are not subject to any restrictions or mandatory obligations.
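The four tiers described above lend themselves to a simple first-pass triage. The following Python sketch is only an illustration of the ordering (strictest tier first), not a legal test: the `SystemProfile` fields and the `triage` function are hypothetical names introduced here, and a real classification requires a case-by-case reading of Art. 5, Art. 6 and Annex III.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # prohibited practices (Art. 5)
    HIGH = "high"                  # Art. 6 and Annex III
    LIMITED = "limited"            # transparency obligations
    MINIMAL = "minimal"            # no mandatory obligations


@dataclass
class SystemProfile:
    """Hypothetical self-assessment answers for one AI system."""
    prohibited_practice: bool = False        # e.g. social scoring
    safety_regulated_product: bool = False   # e.g. toys, lifts
    annex_iii_area: bool = False             # e.g. employment, law enforcement
    interacts_with_humans: bool = False      # e.g. a chatbot
    generates_synthetic_media: bool = False  # e.g. deep fakes


def triage(profile: SystemProfile) -> RiskTier:
    """Return the strictest tier whose (simplified) condition is met."""
    if profile.prohibited_practice:
        return RiskTier.UNACCEPTABLE
    if profile.safety_regulated_product or profile.annex_iii_area:
        return RiskTier.HIGH
    if profile.interacts_with_humans or profile.generates_synthetic_media:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


chatbot = SystemProfile(interacts_with_humans=True)
spam_filter = SystemProfile()
print(triage(chatbot).value)      # limited
print(triage(spam_filter).value)  # minimal
```

Checking the tiers from strictest to most lenient means that a system meeting several conditions at once, such as a chatbot used for recruitment decisions, ends up in the higher category.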

RISK CLASSIFICATION OF LEGAL AI APPLICATIONS

Within the legal domain, here’s how various legal AI applications might be categorized under these risk levels, based on their potential impact and use cases:

Unacceptable Risk: AI applications that could potentially violate fundamental rights or raise significant ethical concerns fall under this category. In the legal field, this might include:

- Predictive policing AI systems that could lead to discrimination or unjust profiling.

- AI applications used by public authorities for automated social scoring of individuals, if developed for legal or judicial purposes.

High Risk: Legal AI applications with significant implications for individuals’ rights and safety, but not outright banned, such as the following:

- AI systems used in courtrooms for risk assessment scores that influence sentencing, bail, or parole decisions.

- AI-driven biometric identification systems used in legal investigations or by law enforcement.

- AI applications for automating decisions in critical legal scenarios, such as child custody cases or asylum applications.

Limited Risk: AI systems that are subject to specific transparency obligations, such as:

- Legal chatbots that provide legal advice or assistance. Users must be clearly informed they are interacting with an AI system.

- AI-generated legal documents or contracts, where users must be aware of the AI’s involvement in their creation.

Minimal or no risk: In the legal sector, examples could include:

- AI tools for legal research that help find relevant cases and statutes but do not advise on legal outcomes.

- AI systems used for organizing and managing legal documents or schedules, assisting in administrative tasks without making substantive legal decisions.

The specific classification of a legal AI application would depend on its intended use, potential impact on fundamental rights, and the extent to which it influences legal outcomes. As the EU AI Act is implemented and further guidance is provided, more precise classifications for legal AI applications may emerge.

COMPLIANCE REQUIREMENTS AND PENALTIES

The EU AI Act will be enforced by a new EU AI Office, which is established within the European Commission. Its primary role is to oversee and enforce the provisions of the EU AI Act, particularly focusing on general purpose AI (GPAI) models and systems across the 27 EU Member States.

Unacceptable Risk AI Systems: Strictly Prohibited

These systems are strictly prohibited from development, deployment, or use within the European Union. The consequences for non-compliance are severe, with potential fines up to €35 million or up to 7% of the company’s total worldwide annual turnover, whichever is higher.

High-Risk AI: Stringent Safeguards

The compliance requirements of high-risk AI systems are as follows:

- Mandatory risk assessment and mitigation systems;

- High-quality data sets to train and test AI systems;

- Detailed documentation and logging of all activities;

- Transparent information to users;

- Appropriate human oversight to minimize risk;

- Specific attention to cybersecurity and accuracy.

Failure to comply can result in hefty fines up to €15 million or up to 3% of the company’s total worldwide annual turnover.
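To make the two fine ceilings quoted so far more tangible, here is a minimal Python sketch of the arithmetic. The function name `max_fine_eur` and the tier labels are hypothetical, and the sketch assumes that the "whichever is higher" rule stated for prohibited systems applies to the high-risk tier as well. It computes the upper limit only; the actual fine in a given case is set by the competent authority.

```python
# Caps quoted in this article: (fixed amount in EUR, percent of worldwide annual turnover)
FINE_CAPS = {
    "unacceptable_risk": (35_000_000, 7),
    "high_risk": (15_000_000, 3),
}


def max_fine_eur(tier: str, annual_turnover_eur: int) -> float:
    """Upper limit of the fine: the higher of the fixed cap and the turnover share."""
    fixed_cap, turnover_percent = FINE_CAPS[tier]
    turnover_based_cap = annual_turnover_eur * turnover_percent / 100
    return max(fixed_cap, turnover_based_cap)


# A company with EUR 2 billion turnover: 7% (EUR 140 million) exceeds the fixed cap.
print(max_fine_eur("unacceptable_risk", 2_000_000_000))
# A company with EUR 100 million turnover: the fixed cap of EUR 35 million is higher.
print(max_fine_eur("unacceptable_risk", 100_000_000))
```

For large companies the turnover-based ceiling quickly dominates, which is why the percentage figures matter more than the fixed amounts in practice.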

Limited and Minimal Risk AI: Transparency Obligations

Limited risk AI systems, e.g. chatbots, must clearly communicate to users that they are interacting with an AI and disclose if AI is used to generate or manipulate image, audio, or video content. Non-compliance with these transparency obligations can lead to fines up to €15 million or up to 3% of the company’s total worldwide annual turnover (Art. 99 AI Act).

Minimal risk AI applications, such as spam filters, generally have no specific obligations or requirements, unless their use or functionality changes and they are reclassified into a higher risk category.

REGULATING GENERAL PURPOSE AI

General-purpose AI (GPAI) models like ChatGPT, Claude, Gemini and others are specifically regulated and classified under the EU AI Act. The Act distinguishes between obligations applicable to all GPAI models and additional requirements for GPAI models with systemic risks. Since models are regulated separately from AI systems, a model alone will not constitute a high-risk AI system, as it is not an AI system itself but only the basis of one.

What is a systemic risk?

The definition of a systemic risk GPAI is laid out under Art. 51 AI Act. Accordingly, a GPAI model is presumed to pose a systemic risk if it has “high impact capabilities”, i.e. if the cumulative amount of computation used for its training exceeds 10^25 floating point operations (FLOPs). Note that this is a total count of operations, not a rate per second.

Additionally, the European Commission may designate a GPAI model as having systemic risk based on various factors, such as the model’s number of parameters, its input/output modalities, or its reach among businesses and consumers.
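To get a feeling for the 10^25 threshold, the following Python sketch compares a rough training-compute estimate against it. The estimate uses a common rule of thumb for dense transformer models (about 6 floating point operations per parameter per training token); this heuristic is an assumption introduced here and is not part of the AI Act. The function names and the example model sizes are hypothetical.

```python
SYSTEMIC_RISK_THRESHOLD_FLOP = 1e25  # cumulative training compute cited above (Art. 51)


def estimate_training_flop(num_parameters: float, num_training_tokens: float) -> float:
    """Rule-of-thumb estimate (an assumption, not from the Act): ~6 FLOP per parameter per token."""
    return 6.0 * num_parameters * num_training_tokens


def presumed_systemic_risk(training_flop: float) -> bool:
    """True if cumulative training compute exceeds the threshold."""
    return training_flop > SYSTEMIC_RISK_THRESHOLD_FLOP


# Hypothetical model A: 70 billion parameters trained on 2 trillion tokens.
model_a = estimate_training_flop(70e9, 2e12)
# Hypothetical model B: 1 trillion parameters trained on 10 trillion tokens.
model_b = estimate_training_flop(1e12, 1e13)

print(f"{model_a:.1e}", presumed_systemic_risk(model_a))
print(f"{model_b:.1e}", presumed_systemic_risk(model_b))
```

Under these assumptions, model A stays more than an order of magnitude below the threshold, while model B exceeds it. Providers would rely on their actual measured training compute rather than on such an estimate.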

Obligations of GPAI model providers

Providers of GPAI models must comply with certain obligations, which can be considered a lighter version of the requirements for AI systems. Among other things, they must create and maintain technical documentation, establish a policy on respecting copyright law, and provide a detailed summary of the content used for training the GPAI model.

Providers of GPAI models with systemic risks have additional obligations, including performing model evaluations, assessing and mitigating systemic risks, documenting and reporting serious incidents to the AI Office and national competent authorities, and ensuring adequate cybersecurity protection.

IMPLEMENTATION OF THE EU AI ACT

The EU AI Act’s phased roll-out depends on the date of entry into force, which can be expected in early June 2024. Here’s a breakdown of the key implementation stages:

- 6 months after entry into force (likely end of 2024): AI systems that fall under the unacceptable risk category will be prohibited, i.e. they must be shut down.

- 12 months after entry into force (likely mid 2025): General purpose AI (GPAI) providers must meet the respective obligations. Further, the EU member states must set up national authorities and the EU Commission will by then have to review the list of prohibited AI.

- 24 months after entry into force (likely mid 2026): Obligations for high-risk AI systems specifically listed in Annex III must be adhered to.

- 36 months after entry into force (likely mid 2027): Obligations must be adhered to for high-risk AI systems that are not listed in Annex III but are used in a product that is required to undergo a conformity assessment under existing specific EU laws, for example toys, radio equipment, in vitro diagnostic medical devices, civil aviation security and agricultural vehicles.

- By end of 2030: Obligations enter into effect for certain AI systems that are components of the large-scale IT systems established by EU law in the areas of freedom, security and justice, such as the Schengen Information System.

Given the timeline above, organizations must actively engage in assessing their AI systems, identifying potential risks, and implementing necessary measures to align with the Act’s stipulations.
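For planning purposes, the month offsets in the timeline can be turned into approximate calendar dates once the entry-into-force date is known. The Python sketch below does this with the standard library only. The entry-into-force date used here is a hypothetical placeholder, the milestone labels are shorthand introduced for this example, and the binding application dates are those stated in the Act itself.

```python
import calendar
from datetime import date

# Offsets in months after entry into force, as listed in the timeline above.
MILESTONES_MONTHS = {
    "Prohibitions on unacceptable-risk AI apply": 6,
    "GPAI obligations apply": 12,
    "Annex III high-risk obligations apply": 24,
    "Product-related high-risk obligations apply": 36,
}


def add_months(start: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of the target month."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def milestone_dates(entry_into_force: date) -> dict[str, date]:
    """Map each milestone label to its approximate calendar date."""
    return {
        label: add_months(entry_into_force, months)
        for label, months in MILESTONES_MONTHS.items()
    }


# Hypothetical entry-into-force date, chosen only for illustration.
assumed_entry = date(2024, 6, 15)
for label, when in milestone_dates(assumed_entry).items():
    print(f"{when.isoformat()}  {label}")
```

Keeping the entry-into-force date as a parameter means the schedule can be recomputed as soon as the publication date in the Official Journal is confirmed.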

CONCLUSION

The AI Act marks a significant milestone for the European Union in its approach to managing the implications and operations of artificial intelligence. This pioneering legislation not only sets a global precedent but also establishes a structured environment in which AI can flourish while safety, transparency, and equity are ensured.

For professionals navigating this terrain—whether lawyers, patent attorneys, or computer scientists—the AI Act offers a clear framework to guide the development and deployment of AI systems within the EU. Staying updated on these regulations will be crucial for ensuring compliance and taking full advantage of the opportunities that AI presents in various sectors.

Don’t miss the opportunity to stay ahead of the AI game. Let us know if you wish to discuss your journey towards compliance and strategic advantage under the EU AI Act. Let us help you navigate this changing landscape with confidence and expertise.

At ALPHALECT.ai, we explore the power of AI to revolutionize the European IP industry, building on decades of collective experience in the industry and following a clear vision for its future. For answers to common questions, explore our detailed FAQ. If you require personalized assistance or wish to learn more about how legal AI can benefit innovators, SMEs, legal practitioners, and society as a whole, don’t hesitate to contact us at your convenience.
